The following question was recently asked on the team.

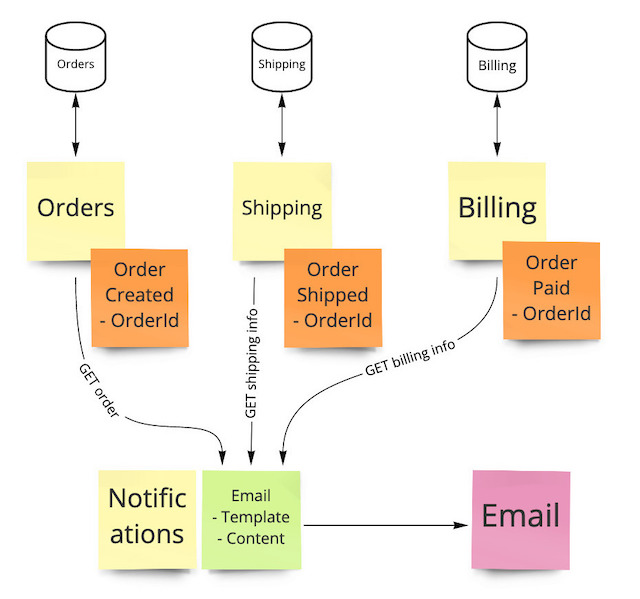

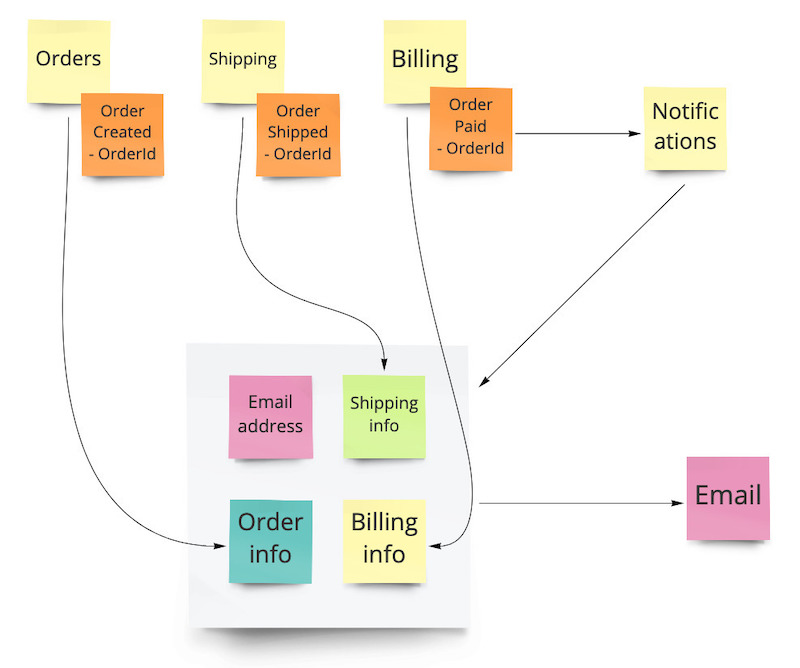

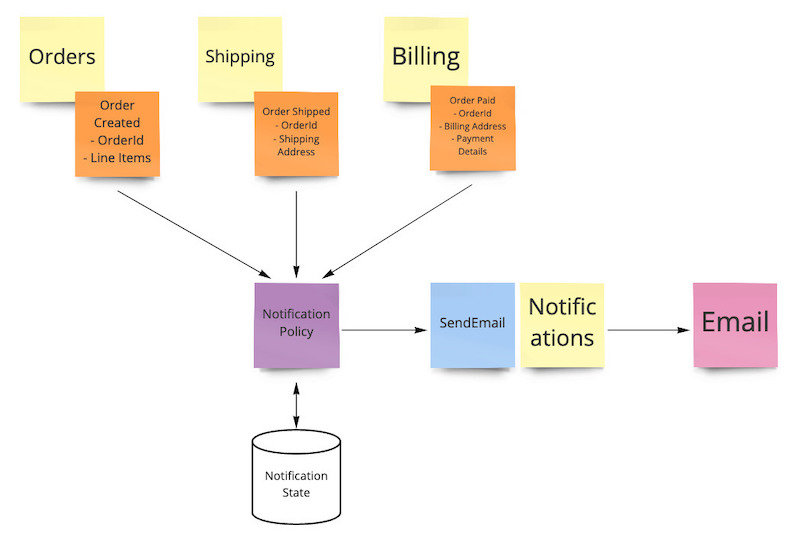

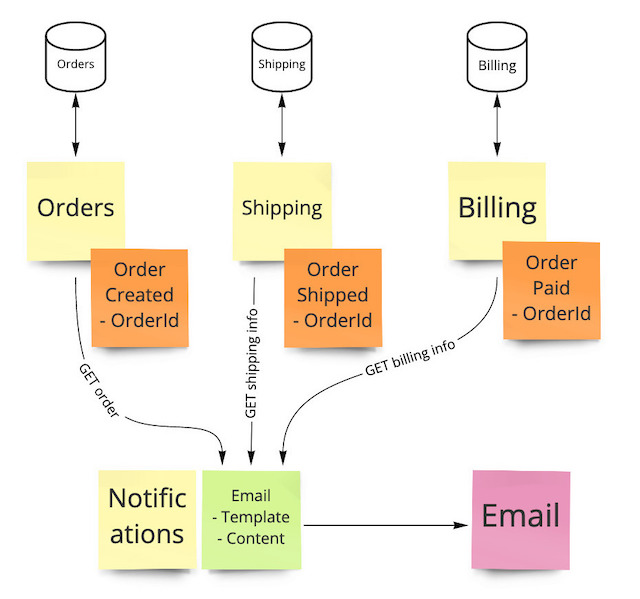

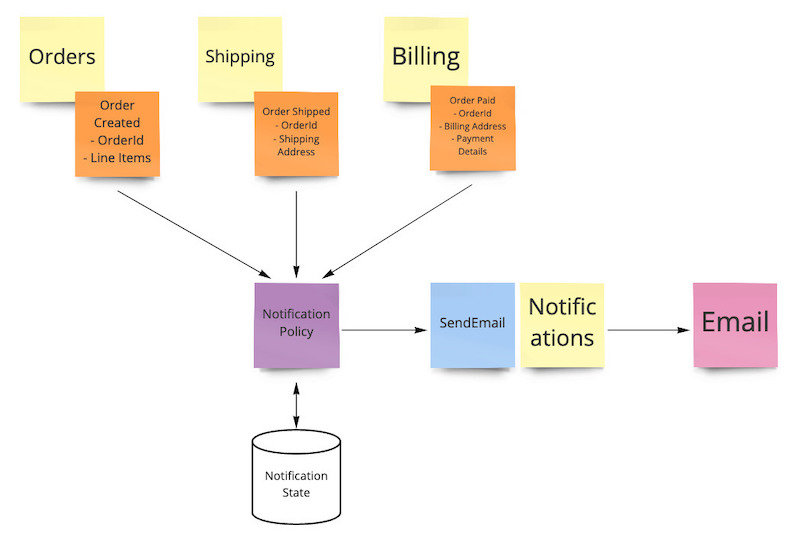

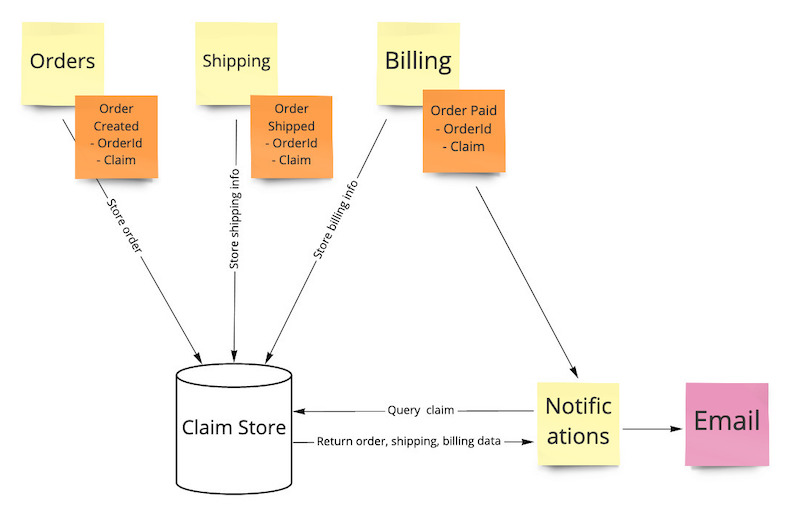

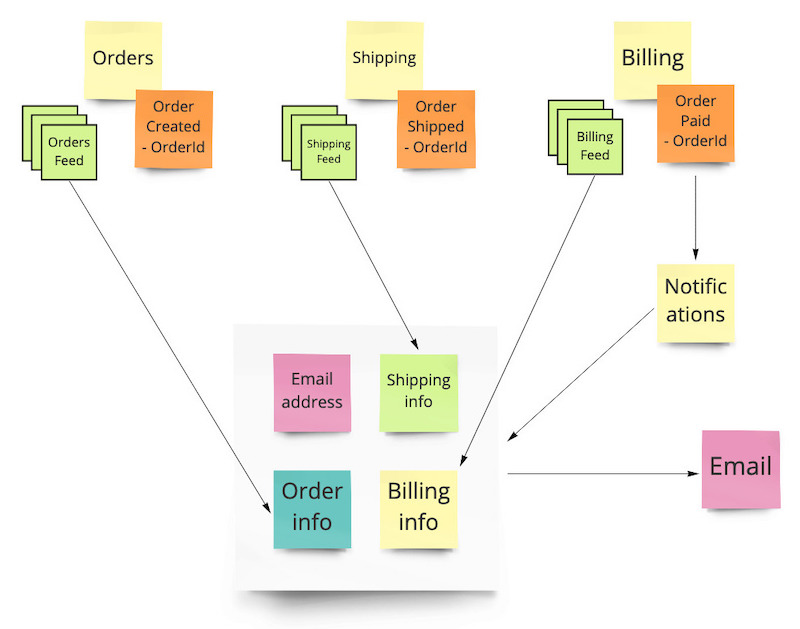

We have an e-commerce application composed of an order service where orders are placed, a billing service for taking payments, and a shipping service for delivering the order.

I’ve taken an Amazon order confirmation email as an example and highlighted the content provided by each service within the email.

We want to introduce a notification service to send emails, such as order confirmation. The confirmation email has a template, provided by marketing, and contains information about the order such as products purchased, delivery address, and payment information.

How do I get the data contained in these separate services without too much coupling?

Aggregating data from multiple autonomous services can be approached in many different ways. Driving our architectural choice is the understanding that an autonomous service is responsible for its own data and domain logic. We want services to fulfill their business purpose without failing when other services are unavailable.

In this article I outline five different implementation strategies: query services, “fat” domain events, claim check, services providing a data feed, and composite UI.

Query services

A typical solution to this problem is to add a query API to each service, such as a RESTful endpoint serving HTTP GET requests, to expose the data needed by other services.

To construct the order confirmation email, the notification service would query the orders, shipping, and billing services to fetch the data required for the content of the email. The responses returned by each service are populated into the email template provided by marketing, ready for the email to be sent.

This approach is well known and straightforward to implement. However, the notification service becomes tightly coupled to, and dependent upon, the other services. It loses its autonomy because it cannot work if any service providing data is unavailable. Its service level also depends upon the total latency of all of the other services (if requests are made sequentially) or the slowest service (if requests are made in parallel). This leads to fragile, tightly coupled, chatty services which may require carefully managed deployment processes.
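To make the latency and availability point concrete, here is a rough back-of-the-envelope sketch. The per-service figures are invented for illustration, and the availability calculation assumes the services fail independently of one another, which may not hold in a real system.

```python
from math import prod

# Hypothetical per-service figures, purely illustrative.
latencies_ms = {"orders": 40, "shipping": 120, "billing": 80}
availabilities = {"orders": 0.999, "shipping": 0.999, "billing": 0.999}


def sequential_latency(latencies):
    """Requests made one after another: the latencies add up."""
    return sum(latencies.values())


def parallel_latency(latencies):
    """Requests made concurrently: the slowest service dominates."""
    return max(latencies.values())


def combined_availability(availabilities):
    """Every service must be up; assumes failures are independent."""
    return prod(availabilities.values())
```

With these numbers, sequential requests cost 240 ms against 120 ms in parallel, and three services at 99.9% each leave the notification service at roughly 99.7% before its own failures are counted.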

Requiring each service to expose its data also breaks one of the tenets of autonomous services: that they don’t return data. The notification service may also require domain knowledge from orders, shipping, or billing to understand how to display and structure the query data provided by those services.

“Fat” domain events

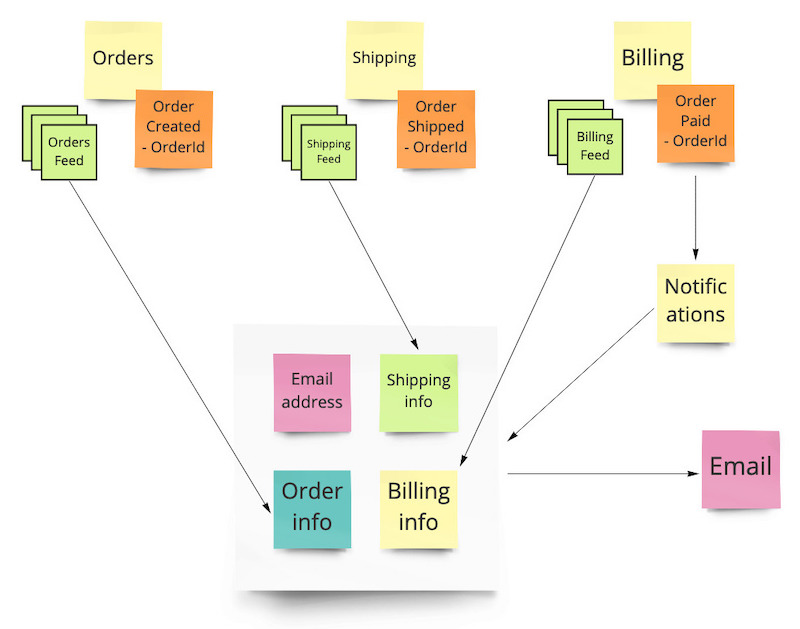

By using a combination of Command-Query Responsibility Segregation (CQRS) and fat domain events, which contain all data required by consumers, a service can become more autonomous.

The notification service aggregates data from domain events published by other services into its own data store. With this approach the notification service uses a process manager (notification policy) to consume relevant domain events, such as `OrderCreated` and `OrderShipped`. It can dispatch the `SendEmail` command to deliver the email once it has accumulated all required data. This command would contain all data necessary to populate the content of the email.
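The process manager described above could be sketched roughly as follows. This is a minimal in-memory illustration rather than a definitive implementation: the `handle` signature, the event payload fields, and the choice to merge payloads into one `content` dictionary are all assumptions, and a real policy would persist its state.

```python
from dataclasses import dataclass, field


@dataclass
class SendEmail:
    """Command carrying everything needed to populate the email."""
    order_id: str
    content: dict


@dataclass
class NotificationPolicy:
    """Process manager: accumulates fat event data per order."""
    required: frozenset = frozenset({"OrderCreated", "OrderShipped"})
    state: dict = field(default_factory=dict)

    def handle(self, event_type, order_id, data):
        """Record the event; return SendEmail once every required event has arrived."""
        seen = self.state.setdefault(order_id, {})
        seen[event_type] = data
        if not self.required.issubset(seen):
            return None  # still waiting for other events
        del self.state[order_id]  # the process is complete for this order
        content = {}
        for event_data in seen.values():
            content.update(event_data)
        return SendEmail(order_id, content)
```

Because each event carries its own data, the policy never calls back to the producing service. It only waits until its local state is complete.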

The domain events published between services would not be the same events as used for event sourcing. Instead, these external domain events would become part of the service’s public API and have a well-defined schema and versioning strategy. This prevents coupling between a service’s internal domain events and those it publishes externally, which can be enriched with additional data required by consumers. The microservices canvas is one way to document the events a service consumes and publishes.

Publishing enriched domain events from a service means a consumer doesn’t depend upon the availability of the event producer after an event has been published. The state at the time the event was published is preserved, which provides a good audit trail and ensures the data is available without additional querying. Integrating services using domain events provides loose coupling between those services. This approach is well suited to asynchronous business operations such as delivering an email.

As a downside, the notification policy may require domain-specific knowledge to understand and interpret the domain events it receives. An example would be the business rules around displaying the billing information for an order, which might need to include VAT or sales tax and show prices in the desired currency. Ideally, this domain logic should reside within the service providing the business capability. Enriching domain events with all the data that any downstream consumer might need could also result in unnecessary data being transmitted between services.

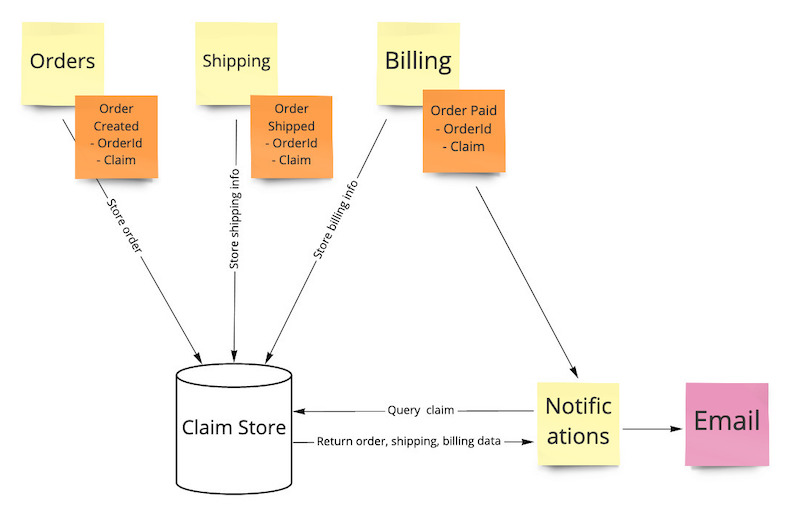

Claim check

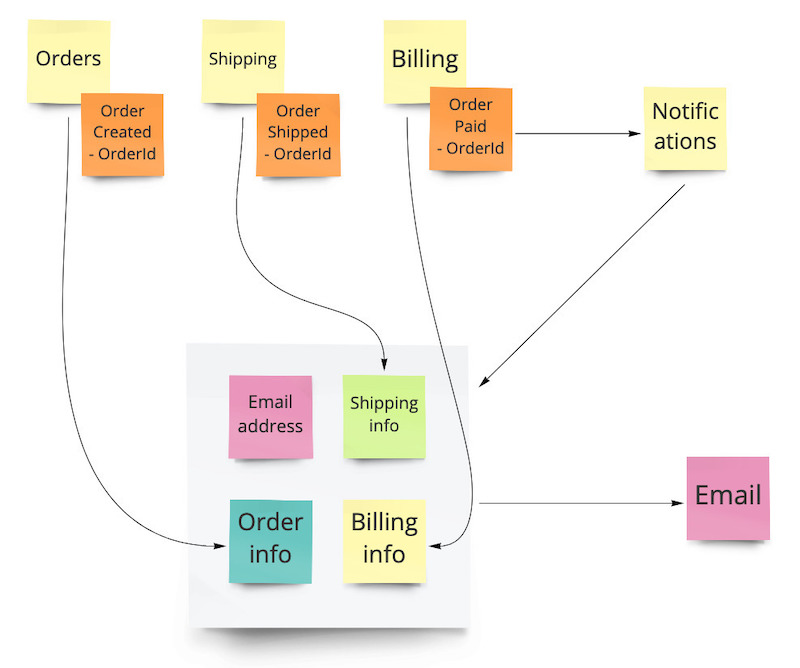

Claim check is described in Enterprise Integration Patterns as a way to: “Store message data in a persistent store and pass a Claim Check to subsequent components. These components can use the Claim Check to retrieve the stored information.”

Each service would store relevant information about the order, shipping info, and billing info into the claim store. The domain events produced during the order’s lifecycle would contain only the order identity and a reference to the claim check. The reference allows any consumer of the event to look up the related data from the claim store. The claim store could be a document store or basic key/value data store.

After receiving the domain event which triggers the confirmation email to be sent (`OrderPaid` in this example), the notification service makes a single request to the claim store for data related to the order. It uses this data to populate the email content before sending.
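The claim check flow might look something like the sketch below. The `ClaimStore` class, the `claims/<order id>` reference format, and the event field names are hypothetical, and a dictionary stands in for the document or key/value store. Each section is written once and never changed, as a simple approximation of an immutable store.

```python
class ClaimStore:
    """In-memory stand-in for a document or key/value store."""

    def __init__(self):
        self._documents = {}

    def check_in(self, order_id, section, data):
        """Store one service's data for an order; return the claim reference."""
        claim_ref = f"claims/{order_id}"
        document = self._documents.setdefault(claim_ref, {})
        if section in document:
            raise ValueError(f"{section} already stored for {claim_ref}")
        document[section] = dict(data)
        return claim_ref

    def retrieve(self, claim_ref):
        """Return everything stored against the claim reference."""
        return dict(self._documents[claim_ref])


def thin_event(event_type, order_id, claim_ref):
    """A thin domain event: identity plus claim reference, no payload."""
    return {"type": event_type, "order_id": order_id, "claim_ref": claim_ref}


def build_email_content(event, store):
    """Notification service: one lookup when the trigger event arrives."""
    if event["type"] != "OrderPaid":
        return None
    return store.retrieve(event["claim_ref"])
```

The event stays small no matter how much order data accumulates, and the notification service talks to one store instead of three services.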

With this approach a service publishes data out to the claim store, rather than providing a query to request its data. The claim store becomes a single point of failure, but this is preferable to depending upon n+1 services to fetch and aggregate the order data. Since the claim store is an immutable document store, it can be made highly available and scalable.

As data is stored externally, this approach uses thin domain events containing the minimum required fields, reducing the data size in transit between services. Using the claim store to collate data for an order allows services storing or reading that data to be independently deployed. The schema for the shared data would need to be agreed upon by all services and carefully versioned to prevent breaking changes.

Services provide a data feed

To expose its data to consumers, a service provides a public data feed. The Atom Publishing Protocol (AtomPub) is one industry-standard way of publishing a data feed over HTTP. Services using event sourcing internally can construct the data feed by projecting their internal domain events into a model suitable for publishing. The data feed is contained within the service and exposes a standardized public interface to consumers.

The notification service would subscribe to the relevant feeds produced by the orders, shipping, and billing services to get the data it requires. This data is used to build the email content ready for delivery.

This approach is an alternative to querying services directly: it uses a standardized protocol and machine-readable format specifically designed for syndication. It similarly suffers from requiring each dependent service to be available for the notification service to work. The consumer’s service level is again affected by each feed’s latency. However, because we’re using a well-known protocol, the feeds can be made highly available, scalable, and cached using existing HTTP tooling.

The content included in the data feed could be in a format ready to be consumed by the notification service, without requiring any domain-specific processing. As an example the billing service could provide a data feed for an order’s billing details, pre-formatted for inclusion in the email template. This ensures each service is responsible for formatting its own data while adhering to its own business rules, which may be complex.
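As a rough illustration of projecting internal events into a pre-formatted public feed, consider the sketch below. The event types (`CardAuthorised`, `PaymentTaken`), their fields, and the `FeedEntry` shape are assumptions made for the example. Serializing the entries as Atom XML and serving them over HTTP is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeedEntry:
    """One public feed entry, already formatted by the owning service."""
    entry_id: str
    updated: str
    summary: str


def project_billing_feed(internal_events):
    """Project the billing service's internal events into public feed entries."""
    entries = []
    for event in internal_events:
        if event["type"] != "PaymentTaken":
            continue  # internal-only events never reach the public feed
        entries.append(FeedEntry(
            entry_id=f"billing/{event['order_id']}",
            updated=event["at"],
            # Formatting rules stay inside the billing service.
            summary=f"Paid {event['amount']} {event['currency']}",
        ))
    return entries
```

The consumer reads `summary` as opaque text, so currency and tax formatting rules never leak into the notification service.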

Composite UI

A composite UI can be used to display a web page containing content areas with data owned by and populated from different services. You might use an `<iframe>` tag in HTML or have the HTML aggregated on the server before sending to the client. This approach can also be applied when displaying data from multiple services in an email template, where parts of the template are populated by different services.

The notification service would use an email template containing placeholder references to the orders, shipping, and billing services where each service’s content should appear. Hyperlinks could be used for these external service references, with each service returning its content as HTML or plain text.

The `OrderPaid` event would trigger the email template to be rendered by the notification service for the order. The placeholder content would be generated by each service for the order and included in the email ready for delivery.

As each service is responsible for rendering its part of the order confirmation email, the domain logic and data are contained within the owning service. Only the billing service knows how to format and display various currencies and handle VAT or sales taxes. Only the shipping service has to deal with whether the product has a physical delivery address or whether it’s delivered electronically (e.g. eBooks). The notification service doesn’t need this domain expertise; its role is to render the composite email and deliver it.

Email sending would be affected by any dependent service being unavailable, as the parts of the email provided by that service wouldn’t be generated. The notification service could choose to degrade gracefully by sending the email without the missing content, or it could wait until all services are available.
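Composite rendering with both failure-handling options could be sketched as follows. The `{{service}}` placeholder syntax, the `render_email` function, and the stand-in renderer functions are all hypothetical. In practice each renderer would be a request to the owning service.

```python
import re

PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")


def render_email(template, renderers, order_id, strict=False):
    """Fill each {{service}} placeholder with that service's rendered fragment.

    strict=False degrades gracefully by omitting missing content;
    strict=True returns None so the caller can retry later.
    """
    failed = []

    def fill(match):
        service = match.group(1)
        try:
            return renderers[service](order_id)
        except Exception:
            failed.append(service)
            return ""

    body = PLACEHOLDER.sub(fill, template)
    if failed and strict:
        return None
    return body


# Stand-in renderers; shipping simulates an unavailable service.
def render_order(order_id):
    return order_id


def render_billing(order_id):
    return "Total: 12.00 GBP"


def render_shipping(order_id):
    raise ConnectionError("shipping service unavailable")


template = "Order {{orders}}. {{billing}} {{shipping}}"
renderers = {"orders": render_order, "billing": render_billing, "shipping": render_shipping}
```

Whether to send a partial email or wait is a business decision. The `strict` flag simply makes that choice explicit at the call site.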